The 4 Levels of AI Agents: When to Use Workflows vs Autonomous Systems

Stop over-engineering simple problems and under-engineering complex ones

If you believe the headlines, AI agents are about to run your company. If you believe your budget, most “agents” barely get through a checklist. This series is for teams in between. We’ll cut through the branding, define what actually counts as an agent, and show when to ship simple workflows vs when to invest in dynamic, loop-driven systems. By the end, you’ll know which level of “agent-ness” to pick, for what, and when.


Discussion is even expanding to speculate about how organisations might be structured in the future:

"In a lot of ways, the IT department of every company is going to be the AI HR department of AI agents in the future. Today, they manage and maintain a bunch of software from the IT industry. In the future, they'll maintain, nurture, onboard and improve a whole bunch of digital agents and provision them to the company to use. Your IT department is going to become kind of like 'AI agent HR'." Jensen Huang, CEO Nvidia

We all know that vision is mostly hype today. But strip away the marketing buzz: what's real, and what exactly is an AI agent?

At Barnacle Labs, we've already deployed autonomous AI agents into production for clients that demonstrate exciting capabilities, so we thought it worth writing a few words to explain our perspective based on that experience.

The Agent Definition Puzzle

But here's the problem: ask ten people what an "AI agent" is, and you'll get eleven different answers.

At one extreme, you have Jensen Huang, in his CES keynote (Jan 2025), painting a grand vision of AI agents as digital employees: autonomous entities that will revolutionise how we work, think, and live. Picture an AI that can handle your entire marketing campaign, manage your customer relationships, or run your business operations with minimal human intervention. It's an inspiring, almost sci-fi vision of artificial intelligence as colleague rather than tool.

At the other extreme, you have companies slapping the "agent" label on anything that uses an LLM to call an API. A chatbot that can check the weather? Agent. A script that uses GPT to summarise emails? Also an agent, apparently.

The term 'AI agent' has become overloaded. But before we can categorise the different types of agents, we need to understand what makes something an "agent" in the first place. Because here's the truth: there's no special "agent AI" - it's all about how we use the AI we already have.

What is an agent technologically?

OpenAI defines an agent simply as "systems that independently accomplish tasks on your behalf". While this captures the essence, other experts provide more specific technical definitions.

Anthropic, by contrast, provides a more architectural definition:

"'Agent' can be defined in several ways. Some customers define agents as fully autonomous systems that operate independently over extended periods, using various tools to accomplish complex tasks. Others use the term to describe more prescriptive implementations that follow predefined workflows. At Anthropic, we categorize all these variations as agentic systems, but draw an important architectural distinction between workflows and agents: Workflows are systems where LLMs and tools are orchestrated through predefined code paths. Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks." — Anthropic's Building effective agents

Simon Willison, a prominent voice in the LLM community, offers a similarly focused definition:

"My preferred definition of an LLM agent is something that runs tools in a loop to achieve a goal." Designing Agentic Loops, Simon Willison

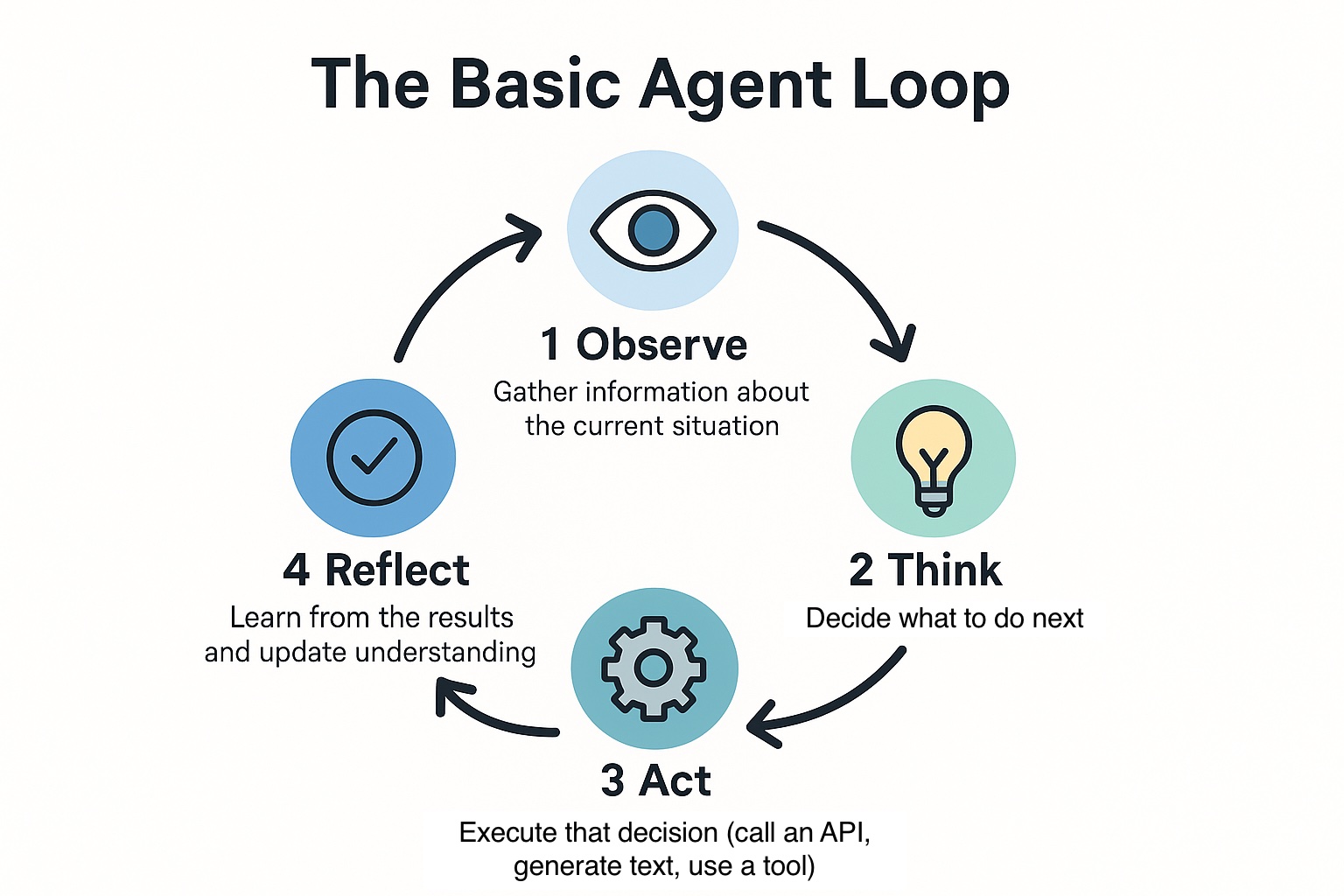

The 'Agent Loop'—a cycle of observing, thinking, acting, and reflecting—has emerged as the defining characteristic of true agents.

The Basic Agent Loop:

- Observe: Gather information about the current situation

- Think: Decide what to do next

- Act: Execute that decision (call an API, generate text, use a tool)

- Reflect: Learn from the results and update understanding...then repeat

The magic happens when this loop runs multiple times, with each iteration building on the last. Instead of cramming everything into one massive prompt, the AI develops understanding iteratively.
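As a rough illustration, the four phases can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a real agent: `fake_model` is a hypothetical stand-in for an LLM call, the two tools and their return values are invented for the example, and the agent's "understanding" is just a dictionary.

```python
from typing import Callable

# Hypothetical tools; a real system would call external APIs here.
def lookup_best_season(state: dict) -> dict:
    return {"season": "spring"}

def lookup_cities(state: dict) -> dict:
    # The result depends on what an earlier iteration discovered.
    if state.get("season") == "spring":
        return {"cities": ["Kyoto", "Tokyo"]}
    return {"cities": ["Sapporo"]}

TOOLS: dict[str, Callable[[dict], dict]] = {
    "lookup_best_season": lookup_best_season,
    "lookup_cities": lookup_cities,
}

def fake_model(state: dict) -> str:
    """Stand-in for an LLM: picks the next tool from what is known so far."""
    if "season" not in state:
        return "lookup_best_season"
    if "cities" not in state:
        return "lookup_cities"
    return "done"

def run_agent(max_steps: int = 5) -> dict:
    state: dict = {}
    for _ in range(max_steps):                # bounded, so the loop cannot run forever
        observation = dict(state)             # Observe: snapshot what is known
        action = fake_model(observation)      # Think: decide what to do next
        if action == "done":
            break
        result = TOOLS[action](observation)   # Act: run the chosen tool
        state.update(result)                  # Reflect: fold the result into the state
    return state

print(run_agent())
```

The second tool call only makes sense because of what the first one returned, which is the point of iterating rather than asking for everything in one prompt.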

Think about it this way. If I asked you to "plan my vacation to Japan, book flights, learn Japanese, research culture, find hotels, and write a haiku"—you'd probably just stare at me. But if we had a conversation where I first asked about the best time to visit, then which cities match that timing, then flights for those cities—each answer informing the next question—you'd do brilliantly. The same principle applies to AI agents—breaking complex tasks into iterative steps dramatically improves outcomes.

A proper Agent Loop enables the AI to discover facts that change everything and pivot the strategy. A single prompt can never compete. Each iteration in the Agent Loop compounds understanding—like compound interest, but for thinking.

The Agent Loop is central to virtually all major agent frameworks, including LangGraph, CrewAI, Google ADK, the OpenAI Agents SDK and many others too numerous to list. Vercel's popular AI SDK has built-in agent loop support that makes it trivial to code this loop, even if you're not using a more formal agent framework.

The distinction between simple AI calls and true Agent Loops is becoming an industry consensus. Both Anthropic's guide to building effective agents and Phil Schmid's analysis of agentic patterns draw the same critical line: workflows that follow predefined paths aren't really agents—true agents dynamically control their own execution through iterative loops. Both these articles are worth a read—they contain further valuable information about agents.

But here's the reality: Many of the things that people are calling "AI agents" are just traditional software that happens to call an LLM once. Others follow predetermined steps with no ability to adapt. Only some systems implement true Agent Loops with dynamic planning and iteration.

This distinction matters enormously. Let's map out the spectrum of what people actually call "agents"—from simple tools to truly autonomous systems...

The Spectrum of "Agent-ness"

The phrase AI agent describes a spectrum, not a binary state. Our framework acknowledges the wide variances in how the term is being used, whilst bringing structure to what we actually mean. Rather than make the meaningless statement that something is an AI agent, we'd prefer to say that it's "a Level-n AI agent"—which helps ground what we actually mean by the phrase.

| Level | Loop | Autonomy | Good for | Pitfalls |
|---|---|---|---|---|
| L1: AI-enhanced tools | None (one-shot) | None | UX polish, summaries | Over-branding as “agents” |
| L2: AI-driven workflows | Fixed path | Low–Med | Back-office automations | Brittle to edge cases |
| L3: Dynamic planning | Iterative loop | Med–High | Coding, research, ops | Guardrails, eval, cost |
| L4: Autonomous workers | Long-horizon loops | High | Long-term vision | Not yet practical |

Level 1: AI-Enhanced Tools

What they are: Traditional software with AI components for specific functions.

Imagine: Like riding a bicycle with a GPS app on your phone. The bike is still a manual vehicle, but the “smart” component gives you direction.

Example: A piece of software that calls a weather API and sends the result to an LLM in order for it to provide a natural language description of the weather (“it’s currently raining in London”).

Agent Loop: Doesn't loop at all - it's one-and-done. Call an API, pass to LLM, return result. There's no observation-action cycle.

Autonomy level: Virtually none—the AI is a smart component in a traditional system.

How practical today: Very practical—this is what most teams are building right now with readily available APIs and frameworks.

Are they "agents"? Barely. Describing an API that happens to use an LLM as anything other than a piece of software feels like a stretch. With no agent loop, Level-1 systems fail that test.
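For comparison with the later levels, here is a minimal sketch of the Level 1 shape, assuming a hypothetical `get_weather` function in place of a real weather API and an `llm` callable in place of a real model client. The stub model exists only so the example runs.

```python
from typing import Callable

def get_weather(city: str) -> dict:
    """Hypothetical weather API call, hard-coded for the example."""
    return {"city": city, "condition": "raining"}

def describe_weather(city: str, llm: Callable[[str], str]) -> str:
    data = get_weather(city)                                  # 1. call the API
    prompt = f"Describe this weather in one sentence: {data}"
    return llm(prompt)                                        # 2. one LLM call, then return

calls: list[str] = []

def stub_llm(prompt: str) -> str:
    """Stand-in for a real model; records the prompt it was given."""
    calls.append(prompt)
    return "It's currently raining in London."

print(describe_weather("London", stub_llm))
```

There is no loop and no decision about what to do next: the model is called exactly once per request.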

Level 2: AI-Driven Workflows

What they are: Systems where AI operates within predefined workflow processes, making tactical decisions along predetermined code paths. Unlike fully autonomous agents, the agent follows a predefined and structured sequence of steps, rather than using AI to determine its own strategy from scratch.

Imagine: Like a car with cruise-control and lane-assist. You still steer and choose routes, but the car makes tactical adjustments (speed, minor steering tweaks) for you within the confines of your decisions about where to drive.

Example: An expense report processor that reads receipts, categorises them by type, flags unusual amounts, and routes approvals based on company policy. This 'agent' always follows the same series of steps: it might use AI in each step, but the steps and their sequence are predefined. The many solutions built with products like n8n are good examples of Level-2 agents.

Agent Loop: Has a predetermined loop - the steps are fixed in code. The AI makes decisions within each step, but can't change the sequence.

Autonomy level: Limited—AI chooses how to execute steps but not which steps to take.

How practical today: Very practical—many production systems operate at this level with predictable costs and outcomes.

Are they "agents"? This is where the debate gets interesting. However, if you adhere to the agent loop definition, the answer is probably no.
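A minimal sketch of the expense example makes the fixed path visible. Everything here is assumed for illustration: `categorise` stands in for an LLM classification step (it uses a keyword match so the example runs), and `APPROVAL_LIMIT` is a made-up policy threshold.

```python
from dataclasses import dataclass

@dataclass
class Receipt:
    text: str
    amount: float

APPROVAL_LIMIT = 100.0  # hypothetical company policy threshold

def categorise(receipt: Receipt) -> str:
    """Stand-in for an LLM classification step (keyword match for the example)."""
    return "travel" if "taxi" in receipt.text.lower() else "other"

def process_expense(receipt: Receipt) -> dict:
    # The order of these steps is fixed in code; the AI never reorders them.
    category = categorise(receipt)                     # step 1: AI decides within the step
    flagged = receipt.amount > APPROVAL_LIMIT          # step 2: policy check
    route = "manager" if flagged else "auto-approve"   # step 3: routing
    return {"category": category, "flagged": flagged, "route": route}

print(process_expense(Receipt("Taxi to airport", 42.0)))
```

The AI influences the outcome of a step, but the sequence itself can only change when someone edits `process_expense`.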

Level 3: Dynamic Planning and Execution Systems

What they are: AI that can break down complex tasks, create plans, and execute them using available tools and/or sub-agents. The distinction from Level-2 is that the workflow of steps is not pre-defined and the AI is able to dynamically create a strategy and direct its own processes and tool usage.

Imagine: Like a semi-autonomous car (e.g. Tesla Autopilot) that can navigate highways, change lanes, and re-route dynamically—but still needs you to tell it where you want to go.

Example: A coding assistant that analyses a bug report, plans a fix across multiple files, implements the changes, and tests them.

Agent Loop: This is where true agent loops emerge. The AI observes results, plans next steps, and adjusts its approach dynamically. It might discover something that changes everything and pivot the entire strategy.

Autonomy level: Significant—AI controls strategy and tactics within defined boundaries.

How practical today: Becoming practical. Coding agents, in particular, have caught on and work extremely well. However, Level-3 agents are not straightforward to build: they need guardrails, and the technology choices are confusing and not always well documented.

The depth of this complexity is often underestimated. Manus's engineering team rebuilt their agent framework four times, discovering challenges like KV-cache optimisation, context management, and attention manipulation that only emerge at scale.

Are they "agents"? Most people would say yes. Level-3 agents pass the agent loop test.

Level 4: Autonomous Digital Workers

What they are: AI systems that can operate independently over extended periods, handling complex, open-ended tasks with minimal human guidance.

Imagine: Like a fully autonomous robo-taxi network: no human driver, able to pick up passengers, adapt to city traffic, handle reroutes, and operate 24/7 across a city.

Example: Jensen Huang's vision—an AI that can manage an entire business function, adapting to changing conditions and making strategic decisions.

Agent Loop: Runs sophisticated loops over extended periods, maintaining long-term memory and strategic goals across multiple sessions.

Autonomy level: High—operates like a digital employee with broad decision-making authority.

How practical today: Mostly aspirational—limited real-world examples of sustained autonomous operation.

Are they "agents"? Definitely, though most are still more aspiration than reality.

Guardrails for systems that loop under AI control

- Bounds: max steps/time/cost per task.

- Tools: allowlist + strict schemas.

- Approvals: human review for writes/emails.

- Observability: log each loop; auto-abort on repeated no-progress.
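Several of these guardrails fit in one small helper that is consulted before every tool call. The sketch below is one possible shape, not a standard API: `LoopGuard`, `GuardrailError` and the default limits are all hypothetical, and cost caps, schema validation and human approvals are left out to keep it short.

```python
import time

class GuardrailError(RuntimeError):
    """Raised when the loop must be aborted."""

class LoopGuard:
    def __init__(self, allowed_tools, max_steps=8, max_seconds=30.0, max_repeats=2):
        self.allowed_tools = set(allowed_tools)
        self.max_steps = max_steps
        self.max_seconds = max_seconds
        self.max_repeats = max_repeats
        self.started = time.monotonic()
        self.log = []  # one entry per approved tool call

    def check(self, tool: str, args: dict) -> None:
        """Call before each tool execution; raises if a limit is hit."""
        if tool not in self.allowed_tools:
            raise GuardrailError(f"tool not on allowlist: {tool}")
        if len(self.log) >= self.max_steps:
            raise GuardrailError("step budget exhausted")
        if time.monotonic() - self.started > self.max_seconds:
            raise GuardrailError("time budget exhausted")
        call = (tool, tuple(sorted(args.items())))
        recent = self.log[-self.max_repeats:]
        # The same call repeated back to back is treated as "no progress".
        if len(recent) == self.max_repeats and all(c == call for c in recent):
            raise GuardrailError("no progress: same call repeated")
        self.log.append(call)

guard = LoopGuard(allowed_tools={"search"}, max_steps=5)
guard.check("search", {"q": "gold room"})
try:
    guard.check("send_email", {"to": "someone@example.com"})
except GuardrailError as err:
    print(err)
```

Keeping the checks outside the model means they hold regardless of what the model decides to do.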

Why This Matters

The key insight from our framework? It's not about the sophistication of the AI, it's about the level of decision-making autonomy you give it.

All four levels can be valuable agentic systems. The problem you want to solve and the level of budget and skill available to solve that problem will often dictate what level you operate at.

Understanding where your system falls on this spectrum isn't academic hair-splitting; it has real implications:

- Cost, skills and complexity: True agents (Level 3+) are more expensive, more complex, and require more skill to build than simpler tools and workflows.

- Testing and validation: Autonomous systems require different testing approaches than deterministic workflows.

- Risk management: Higher autonomy means higher potential for both meaningful breakthroughs and spectacular failures.

- Technology choices: Complex agents require sophisticated technology stacks and frameworks that simpler problems don't need. For straightforward use cases, you'll often achieve better results at lower risk/cost by avoiding unnecessary complexity and sticking to proven, lightweight solutions.

Case Study: Level 3 Agents in Production

At Barnacle Labs we recently built a Level 3 agent system for a hospitality client that demonstrates these capabilities are more accessible than many might assume.

The Challenge: Build a customer service system that could handle diverse queries—from finding static information from blog posts and FAQs, to checking real-time pricing and availability through APIs into core systems.

The System:

- RAG tool for accessing documentation that helps answer FAQ type questions

- Content Management System (CMS) for the client to add additional source text that can then be used by the RAG tool

- Tools for real-time pricing and inventory APIs

- Tool for Google Maps integration for location-based queries

- Built using a custom agentic framework

- 3 months from kickoff to completion

Agent Framework Choice: We evaluated several popular frameworks but found that for our specific requirements, a lightweight custom implementation offered better visibility and control.

We ended up using a simple tool loop in our code. The key lesson from the framework evaluations and tests was that most agent frameworks, whilst providing a lot of valuable functionality, also bring additional complexity that wasn't needed for this specific project.

Level 3 Agent Behavior in Action: The key distinction from a Level 2 workflow is that the agent dynamically chooses which tools to use and how many times to call them. Nothing is fixed or scripted: the text of every answer is generated in real time by the AI, and the AI decides the strategy for answering each question.

This approach revealed emergent capabilities we hadn't explicitly designed for. When users asked comparison questions—"What's the difference between gold and silver rooms?"—the agent correctly reasoned that it needed to:

- Make a tool call to query details for the gold room

- Make a tool call to query details for the silver room

- Synthesise a comparison from both results

No if-then-else logic. No predefined comparison workflow. The agent simply figured out the strategy without any input from us.
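To make the shape of that behaviour concrete, here is a toy tool loop. It is not the production code: the room data is invented, `get_room_details` is a hypothetical tool, and `fake_model` uses simple string matching purely so the example runs, whereas in the real system that decision is made by the LLM.

```python
ROOMS = {  # hypothetical data
    "gold": {"size_m2": 40, "breakfast": True},
    "silver": {"size_m2": 28, "breakfast": False},
}

def get_room_details(room: str) -> dict:
    """Hypothetical tool: look up one room type."""
    return ROOMS[room]

def fake_model(question: str, results: dict) -> dict:
    """Stand-in for the LLM: asks for any room it still needs, then answers."""
    for room in ROOMS:
        if room in question.lower() and room not in results:
            return {"tool": "get_room_details", "room": room}
    summary = "; ".join(
        f"{name}: {details['size_m2']} m2" for name, details in sorted(results.items())
    )
    return {"answer": summary}

def answer(question: str, max_iterations: int = 4) -> tuple[str, int]:
    """Run the tool loop; returns the answer and the number of tool calls made."""
    results: dict = {}
    for calls_made in range(max_iterations):   # capped, as a guardrail
        step = fake_model(question, results)
        if "answer" in step:
            return step["answer"], calls_made
        results[step["room"]] = get_room_details(step["room"])
    return "Sorry, I could not answer that.", max_iterations

print(answer("What's the difference between gold and silver rooms?"))
print(answer("Tell me about the silver room"))
```

The loop itself contains no comparison logic. The number of tool calls varies with the question because the model, not the code, decides when it has enough information.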

Results: The system is now live on the homepage of a major hospitality provider, handling 200+ customer queries daily.

Key outcomes:

- 95%+ first-contact resolution - most queries resolved without human escalation

- CMS-powered extensibility - subject-matter experts can add new content into the CMS, without any code or AI changes

- Zero production incidents - the AI-controlled tool selection has operated without problems

Key Lessons:

- Tool descriptions need to be extremely clear and distinct - we built a translation layer to simplify complex legacy APIs with 20+ parameters into focused, single-purpose tools. This dramatically improved tool call success rates

- Guardrails are essential (we capped the number of tool call loop iterations)

- The flexibility to handle edge cases without code changes justified the complexity

- Many agent frameworks bring significant extra complexity/risk that might not be needed for a specific project

- DIY agent solutions might sound risky, but the reality is that building around a simple tool loop can be easier to control than a full agent framework
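The translation layer from the first lesson can be pictured as a thin wrapper. The sketch below is an assumption-laden illustration rather than the client's actual code: `legacy_search`, its parameters, the defaults and the `TOOL_SPEC` layout are all hypothetical, and the spec is a generic JSON-Schema-style description not tied to any one vendor's format.

```python
def legacy_search(**params) -> dict:
    """Hypothetical legacy API with many parameters; returns canned data here."""
    return {"request": params, "rooms": [{"type": params["room_type"], "price": 120}]}

# Parameters the model never needs to think about are fixed in one place.
LEGACY_DEFAULTS = {
    "channel": "web", "currency": "GBP", "locale": "en-GB",
    "include_tax": True, "page": 1, "page_size": 10,
}

def get_room_price(room_type: str, check_in: str, nights: int) -> dict:
    """Single-purpose tool exposed to the model: three clear arguments only."""
    if nights < 1:
        raise ValueError("nights must be at least 1")
    response = legacy_search(
        **LEGACY_DEFAULTS, room_type=room_type, check_in=check_in, nights=nights
    )
    room = response["rooms"][0]
    return {"room_type": room["type"], "price_per_night": room["price"]}

# The description the model sees: short, distinct, and free of legacy detail.
TOOL_SPEC = {
    "name": "get_room_price",
    "description": "Get the nightly price for one room type on a given check-in date.",
    "parameters": {
        "type": "object",
        "properties": {
            "room_type": {"type": "string"},
            "check_in": {"type": "string", "description": "ISO date, e.g. 2025-10-01"},
            "nights": {"type": "integer", "minimum": 1},
        },
        "required": ["room_type", "check_in", "nights"],
    },
}

print(get_room_price("gold", "2025-10-01", 2))
```

The model only ever chooses between a handful of narrow tools like this one, which leaves far less room for a malformed call than exposing the full legacy interface.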

This wasn't an exotic research project—it was a pragmatic business system that solved real problems and demonstrates that Level 3 agents are achievable today with the right approach.

Concluding Thoughts

The Reality Check: Level 1 systems aren't really agents—they're just traditional software with AI components. And Level 4 autonomous digital workers remain aspirational, not practical reality. This leaves us with the middle ground: Levels 2 and 3.

The Practical Sweet Spot: For most organisations, Level 2 (AI-Driven Workflows) hits the right balance. Fixed workflows—"do this, then this, and depending on the result, do that"—solve real problems with manageable complexity, predictable costs, and maintainable systems. Don't underestimate the value of simplicity when it works. However, these workflows aren't true agents if you adhere to Anthropic's definition. But don't let semantics stop you from gaining the value.

"Always seek the simplest solution first. If you know the exact steps required to solve a problem, a fixed workflow or even a simple script might be more efficient and reliable than a agent." — Zero to One: Learning Agentic Patterns.

The True Agent Frontier: Level 3 is where genuine agent behaviour emerges. These systems implement real Agent Loops with dynamic planning and strategy adaptation. They can be more complex to build, require a bit more guardrail thinking, and so require skilled engineers. But they're also where AI starts to feel genuinely transformative rather than just enhanced automation. A simple agent loop in code is easy to set up and, with care, Level 3 agents are more achievable than you might assume.

The Bottom Line: Pick the least autonomy that reliably solves your problem. Most teams should start at L2 for speed and predictability; move to L3 when adaptability materially changes outcomes. But always remember: not every problem needs an autonomous agent. Sometimes the best agent is the one you don't build.